Implementing a true zero-copy architecture

6min • Last updated on Feb 5, 2026

Olivier Renard

Content & SEO Manager

[👉 Summarise this article using ChatGPT, Google AI or Perplexity.]

The volume of digital data continues to grow at an unprecedented pace. This year, global data production is expected to reach 221 zettabytes (IDC / Statista), up 27% year over year.

Within organisations, an average of 106 SaaS tools are used daily (BetterCloud). Data flows across countless systems, copies proliferate, and governance becomes increasingly complex.

These challenges are pushing companies to rethink how data moves across their ecosystems and to modernise their data stacks.

Key Takeaways:

Zero-copy refers to using data without duplicating it. The concept exists both in computer programming and in data architecture.

In a data stack, the goal is to avoid data duplication across tools and systems.

This approach has a direct impact on governance, costs, data freshness, and activation performance.

Applied to a CDP, it means relying on the data warehouse or data lake as the system of record. The warehouse-native model stands apart from the “no-copy” claims made by traditional CDPs.

👉 What is zero-copy architecture, and why is it gaining so much attention? Discover the fundamentals and real-world applications of this approach. 🔍

What is a zero-copy architecture?

Zero-copy architecture refers to using data where it already lives, without duplicating it.

Tools access and leverage data from a single source of truth, without copying it into their own databases.

In computing, zero-copy originally describes memory-optimisation mechanisms. In the context of a Modern Data Stack, it refers to an architectural principle: bringing use cases to the data, rather than moving data to each use case.

In practice, data remains in a central layer—most often a cloud data warehouse. Analytics tools, activation platforms and business applications query and use this data directly, without creating additional copies in their own systems

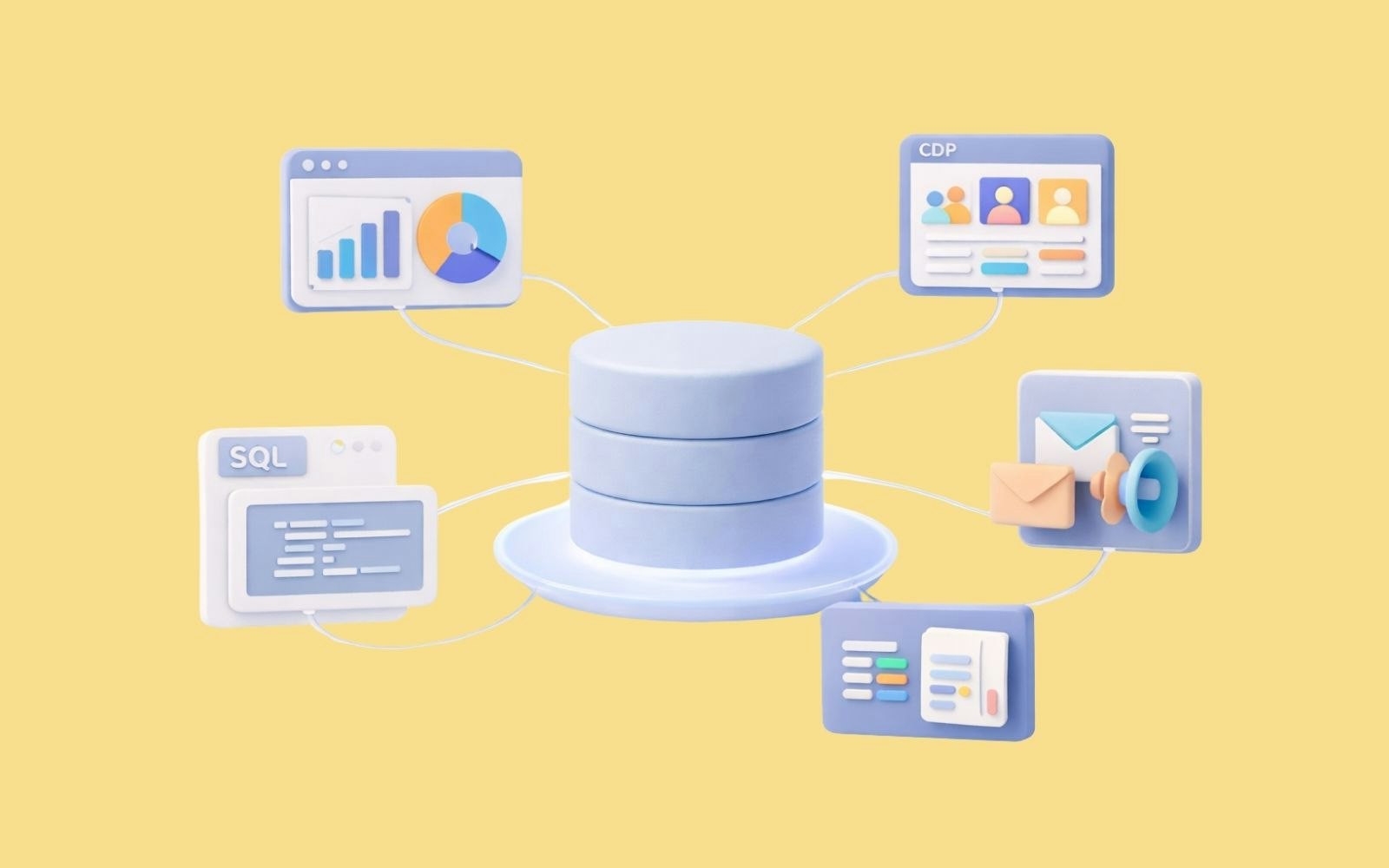

How this differs from traditional architectures

Traditional data architectures still largely rely on a “copy-to-serve” model. Data is duplicated during ingestion, transformation, or when it is consumed by business applications (BI tools, marketing platforms, etc.).

This approach leads to higher storage costs, increased latency, multiple versions of the truth across systems, and growing integration debt over time.

Traditional CDPs duplicate data, negating the concept of "Single Source of Truth"

Use cases

Analytics & BI: teams analyse data directly from the warehouse, without exporting it to intermediate databases.

Omnichannel activation: segments and audiences are built on warehouse data and then activated across marketing channels.

Real-time personalisation: applications consume up-to-date data to adapt messages, content or offers.

Partner data sharing: data can be made available to external partners through sharing mechanisms (e.g. data clean rooms), without physical duplication.

These examples all illustrate the same principle: simplifying data flows and bringing use cases closer to the data, without multiplying copies.

Principles and implementation in a Modern Data Stack

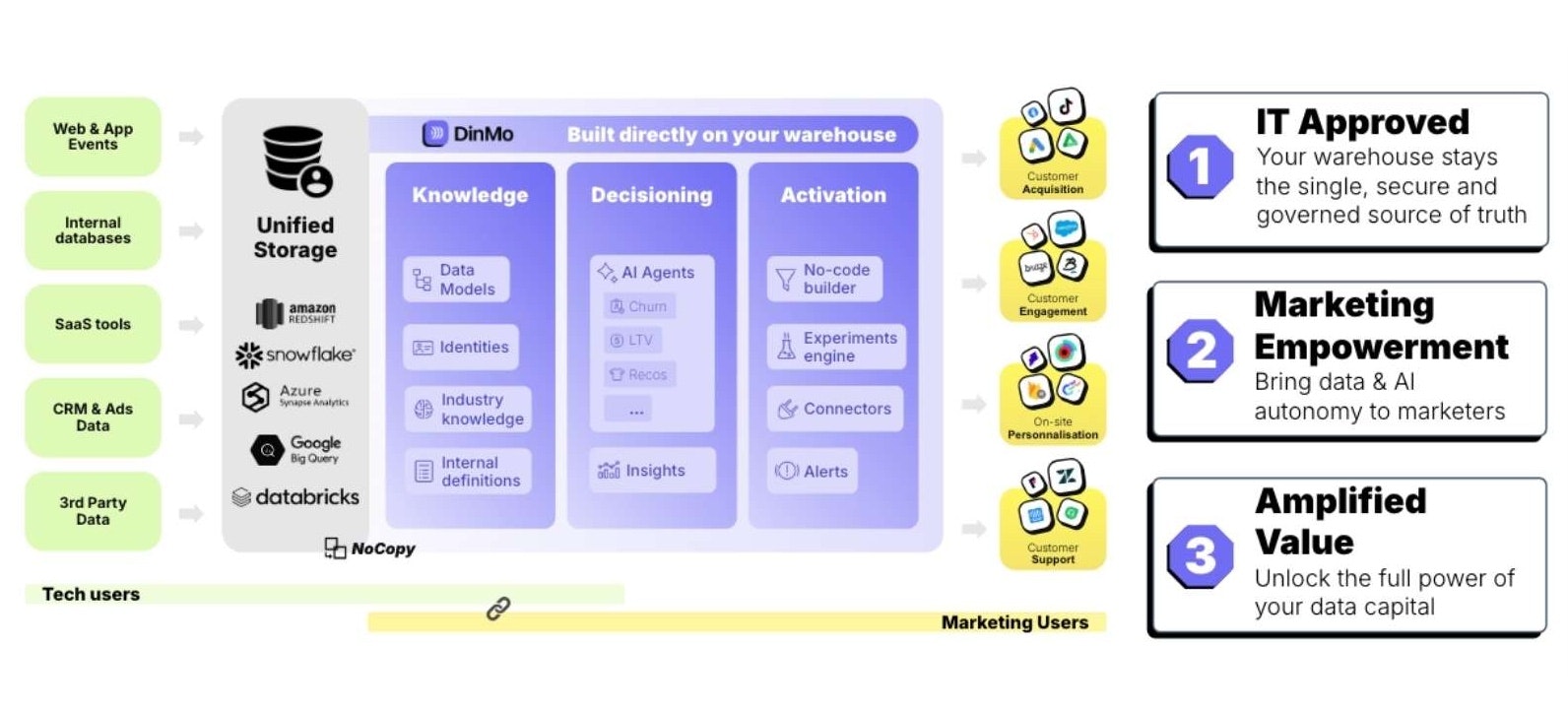

A zero-copy architecture is based on the idea that data remains in a central repository, and that use cases come to the data. In a Modern Data Stack, this foundation is a cloud data warehouse, which centralises customer, product, transactional and behavioural data.

The tools in the stack (analytics, marketing, applications, data science) do not each create their own database. Instead, they rely on the warehouse to read and use the data.

The stack is designed to access data at the source and query existing tables. Key mechanisms include data sharing, external tables/views, and federated queries, among others.

From a business perspective, this limits inconsistencies between systems. Business definitions are shared: what is visible in BI is exactly what is used for activation.

Turning data & AI into everyday marketing tools

Benefits and limitations

Key benefits include:

Fresher and more secure data,

Simplified governance and improved compliance,

Faster time-to-value,

Fewer integration pipelines.

Some limitations to be aware of:

Greater dependency on the compute engine,

Potentially slight latency for certain specific use cases,

Some situations still require data to be copied (e.g. legacy tools).

When should you favour a zero-copy architecture?

A zero-copy architecture is particularly well suited when use cases multiply—from analytics to marketing activation, including scoring and data sharing. It is especially valued by organisations facing strong security or compliance requirements.

Above all, this type of infrastructure aims to simplify data flows and reduce the proliferation of silos.

Zero-copy architecture and customer data

Customer data has specific characteristics. By nature, it is high-volume and constantly evolving, used by multiple teams and subject to strict regulations.

To create value, organisations need to unify their customer data, then segment it accurately in order to activate it across multiple channels and measure performance.

In a Modern Data Stack, these use cases rely on the data warehouse. Direct access makes it possible to work with customer data where it lives, without multiplying databases.

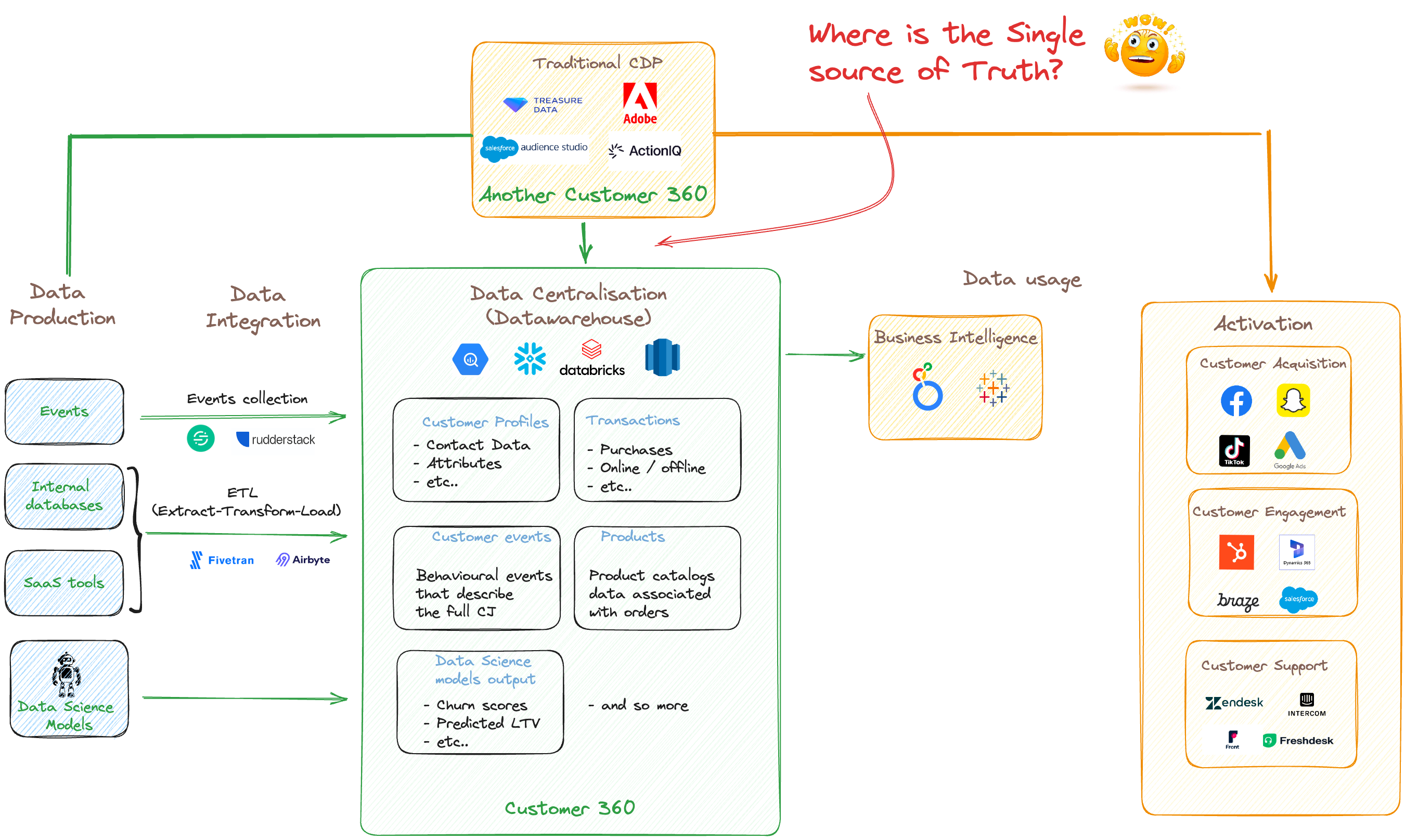

Composable CDPs: beyond the “no-copy” marketing narrative

Many legacy CDPs from the MarTech ecosystem now promote a “no-copy” approach.

In practice, this usually means:

A connection to the data warehouse,

The selection of a subset of profiles and key attributes,

Followed by replication into their own database.

In other words, the warehouse feeds a proprietary internal model. A partial copy of the data is still maintained on the vendor side.

This approach imposes a predefined data model and requires ongoing maintenance of mappings. It relies on parallel storage and increases the risk of vendor lock-in.

The composable approach, or zero-copy by design

A warehouse-native composable CDP follows a different logic. It relies directly on the company’s data warehouse, without storing data on the vendor’s infrastructure or enforcing a predefined model.

All computations run within the warehouse, and segments, scores and activation logs are also written back there. The data warehouse becomes the single source of truth: what you see in BI is exactly what is used for activation.

This approach avoids creating a second source of truth. There are no discrepancies between tools—teams work from the same tables and the same business rules.

Criteria | Composable (warehouse-native) | “No-copy” (CDP traditionnelles) |

|---|---|---|

Vendor data storage | ✅ None | Vendor-side storage (replicated profiles / attributes) |

Data model | Not imposed; reads the existing customer model | ⚠️ Imposed; mapping to a proprietary schema |

Source of truth | Single (CDW) ; BI ↔ Activation aligned | ⚠️ Dual (CDW → vendor store); risk of discrepancies |

Governance & GDPR | Reduced scope (no third-party replication) | Broader scope (two databases to govern). PII replicated to a third party |

Performance | Computation in the DWH (elasticity, MPP) | Re-materialisations, indexes, caches on the vendor side |

Time-to-value | ✅ Fast; no forced remapping | ⚠️ Latency due to batch jobs, recurring remapping (integration debt) |

Costs | CDW only (pay-as-you-go) | CDW + vendor storage / compute |

Scalability | ✅ Unconstrained (new tables / fields without friction) | Dependent on the vendor schema (friction with every change) |

In other words, a zero-copy architecture applied to customer data is not about copying less. It is about aligning BI, data and marketing activation around the data warehouse, without recreating a parallel customer database.

Conclusion

More than a marketing slogan, zero-copy is first and foremost an architectural choice. Its goal is to simplify how data flows across the stack, without creating a parallel source of truth.

The trend is moving towards open, warehouse-centric ecosystems. Composable systems are becoming the norm, driven by the widespread adoption of cloud data warehouses.

👉 Discover how DinMo integrates with your data stack to launch your first use cases in under an hour.

FAQ

Zero-copy and performance: what about processing times?

Zero-copy and performance: what about processing times?

With “no-copy” approaches, batch latency is common (hourly or daily). This is caused by synchronisation pipelines and the re-materialisations required to populate the vendor’s internal store.

With a composable approach, the data warehouse remains the engine: fewer points of friction, fresher data, and results that can be much closer to real time, depending on your use cases.

Can you combine both approaches?

Can you combine both approaches?

Technically, yes. But you will reintroduce the limitations of “no-copy”: dual sources of truth, higher costs, and additional latency caused by synchronisation.

The value of a composable approach lies precisely in avoiding this structural duplication and keeping an infrastructure that is simpler to operate over time.

Zero-copy and GDPR: what are the benefits?

Zero-copy and GDPR: what are the benefits?

True zero-copy supports compliance with data protection regulations. Personal data stays in the data warehouse, which simplifies governance, access controls and deletion processes.

It also limits the replication of PII into third-party tools and makes it easier to comply with GDPR requirements.